GoldenGate – The Bridge to Everywhere

Oracle GoldenGate has generated a lot of buzz in the Oracle community since the software was purchased by Oracle in 2009. It is marketed by Oracle as a niche application, but one that can be of use in nearly any Oracle environment. Additionally, Oracle has made it clear that they expect GoldenGate to be the chief replication method in the future instead of Streams of Advanced Replication.

The reasoning behind this is simple: GoldenGate is a true heterogeneous replication solution that is not tied directly to the Oracle software. Using GoldenGate it is possible to replicate from nearly any database to any other database. Available platforms include: DB2, MySQL, Oracle, SQL/MX, SQL Server, Sybase, Teradata, TimesTen, flat files, and many other platforms via Java. The software is capable of replicating in near real time or on a lag, and can take a single source and pump it to multiple destinations. GoldenGate is like the highway system for databases, allowing quick transfers to and from any location with many extra destinations easily available.

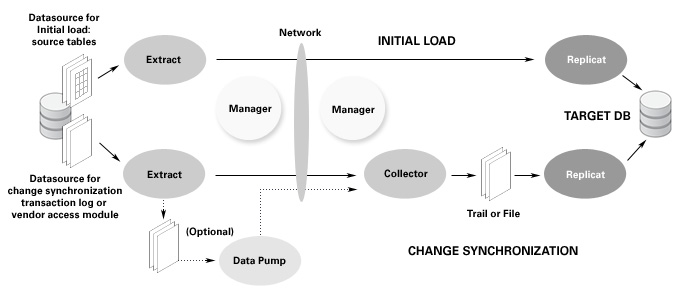

GoldenGate accomplishes these tasks through a simple set of processes distributed around a centralized messaging service for communication. Before we dive into how GoldenGate can be used in your Oracle environment, let’s go through the components of GoldenGate to get a better understanding of the software.

The Extract Process

The first step in any replication process is to capture changes on the source environment. GoldenGate accomplishes this via Extract processes that are able to read transaction logs on the source system and write change data into a trail file (we will cover this next). Multiple Extract processes can be configured which pull different sets of data. This data can be pulled constantly (24×7 replication), on a scheduled basis, or even configured as an event-based system where capturing of data begins when certain data changes are made.

By reading from transaction logs, GoldenGate is capable of recording changes with very minimal overhead to the source system. Even better, it is possible to use an Oracle 11g Active DataGuard environment as a GoldenGate source, which means that the source system is barely impacted at all.

The Extract process is also capable of using stored data as a source instead of transaction logs. This is mostly useful for making an initial copy of a database to a target environment.

Trail Files

The Trail is where all extracted changes are stored. It holds a combination of DDL and DML. Trail files can be designated as either a local trail that stays on the same server as the extract, or a remote trail which is sent via TCP/IP to a target system.

GoldenGate maintains the trail files; the main responsibility of the DBA is to initially set up the storage and locations for the files. As data is extracted, it is written to the trail files. As the files fill up, new trail files are formed and the data keeps writing. As data is consumed, GoldenGate is capable of purging old trail information.

Oracle documentation recommends using separate disk for the trail file to guarantee GoldenGate processing is fast and does not contend with other resources on the environment. Even with fast systems and purging turned on, the trail file will need room to grow particularly in the event of a network outage. In order to know how much space is required, Oracle recommends the following formula:

(log volume in one hour) * (max number of hours network downtime) x 0.4 = trail space

This is based on Oracle’s estimate (page 6) that the GoldenGate Extract will actually record only 40% of data in redo logs. One of the things that makes GoldenGate so fast is the limited amount of data required for Extract; for instance, GoldenGate only extracts committed transactions whereas the redo logs hold all transactions whether committed or uncommitted.

Trail files are almost always written remotely for the purposes of replication. However, the Extract can store trails locally so that they can be picked up by another process called a Data Pump that will send the trail data to multiple destinations. If a Data Pump is not used, all written data will be on the target system and no storage is required for trail files on the source environment.

Collectors

The Collector runs on the target system and receives trail file information to be written to disk. As data is extracted and sent over the network, the Collector will place the data in the proper files to be picked up by the Replicat process.

Replicat

The Replicat process consumes data from trail files on the target system. This process is able to parse all trail information, run DDL and DML against the target environment, and keeps track of written records into an area called the checkpoint table. In order to maintain consistency and guarantee data is picked up properly after errors, the checkpoint table records the progress of replicat processes as data is consumed.

Replicats are capable of loading large sums of data at once or in a synchronized configuration. For instance, one replicat can be created to do an initial data load from a source environment, and another can be created to continue synchronizing changes once the initial load is complete.

If the replicat process receives errors, it will record its progress and abort. In GoldenGate, the act of aborting the process due to error is called abending. If a process is abended, it has aborted due to error and must be fixed.

Usage Overview

GoldenGate uses these components in order to build a complete replication environment. Extract processes pull data and write to trail files which are processed by collector processes and consumed by replicat processes. The real magic behind GoldenGate happens by exploiting this configuration in various ways:

- Multiple extract processes can pull different sets of data in parallel and broadcast it to multiple targets.

- Single extract processes can pull data and broadcast via Data Pumps

- Multiple systems can extract to a single target where multiple replicat processes can consolidate data

- Use multiple database types in any combination for the source(s) or target(s)

- Filter and/or map data en route to the target for custom data requirements

In this way, GoldenGate can be used to completely replace even the most vigorous replication or ETL processing structure. With these capabilities in mind, let’s talk about some of the ways GoldenGate could be used in the average Oracle environment.

Making the Most of GoldenGate

The GoldenGate installation is easy enough that it can be deployed on any system. It works on Unix, Linux, and Windows and transmits data over TCP/IP. The software itself is self-contained and can literally be set up within minutes. So with all this ease of use, how can we put it to work?

Simplified Extract, Transform, and Load (ETL)

Oracle has many options for ETL and ELT. Most notable is Oracle Data Integrator (ODI), which now includes integration with GoldenGate for enhance change synchronization. ODI utilizes a highly efficient ELT process to collect multiple sets of data, load to a staging location, then transform the data for real-time warehousing. If you’re interested in learning more about ODI, this blog has some great information.

Even without ODI, GoldenGate is easily usable for the aggregation of data for warehouse loading. Extract processes can pull data simultaneously and in real-time from Oracle, SQL Server, AS/400 environments, XML, flat files, and many more sources. All of this data will be written as multiple trail files to the target environment that will then be written to the warehouse (again, on any platform) by replicat processes.

Using this method, GoldenGate can even filter and clean the data during the process in order to achieve the bulk of ETL work in a single set of steps. Or if you prefer, the data can simply be aggregated then cleaned by other methods on the target system for ELT.

Data Distribution

With the use of Data Pumps to send single data sources to multiple locations, it is very easy to guarantee that data across your enterprise is consistent and reliable. The extract process writes data to a local trail file that is picked up by a data pump. The data pump in turn sends this information to multiple destinations (near or far) that are collected and written to the target environment via the replicat. In this modern era of disk storage, data redundancy such as this eliminates the need for costly database links and instead allows real-time access to remote data by making use of data duplication rather than network reads.

In fact, the ‘real-time’ buzzword is huge with GoldenGate, and Oracle is using it as much as possible. The core theme behind GoldenGate is real-time access to real-time data; that is, the data you require will be accessible immediately and will always be up to date. Replacing database links with synchronized copies will improve performance on many systems tremendously as long as the data is guaranteed to be fresh.

Refresh Development Servers

One of the biggest annoyances for many DBAs is refreshing development systems with up to date data. GoldenGate is formed of reusable components, and it is very easy to extract and replicat initial data loads. By creating these processes in GoldenGate once, a DBA can initiate easy on-demand refreshes of development environments.

By using data pumps, the DBA can use a single extraction and use it to populate multiple development environments, individual environments for different development teams, DBA sandboxes, or development/QA environments in parallel. These environments can be spread across multiple operating systems, database systems, or even versions. Testing on 11g (or 12c soon) will be a breeze due to the ability to seamlessly replicate.

While it may not be as fast as solutions like Delphix, it is a valid method of refreshing via replication.

Upgrades

The capability to move between versions introduces another logical use of GoldenGate: upgrading to new releases. GoldenGate can replicate seamlessly from server to server or locally and can be used to duplicate a database onto a new version using existing processing configurations.

For instance, if GoldenGate is used to replicate data between a 10g database and another 10g database, an upgrade is as simple as stopping replication to the target system temporarily, upgrading to 11g, then starting replication again. GoldenGate operates outside of the data dictionary and can seamlessly work between these versions. The same goes for moving to another server or even to another operating system.

Rolling Development into Production

Multiple extracts and replicats can be easily configured to pull development data and merge it with production tables. Alternatively development data can be pulled from multiple locations, or development and production data can be pulled from their respective databases and merged into a new environment. Whichever way you choose, the configurations for the extracts and replicats can be re-used to perform these operations on a regular basis.

Migrating 3rd Party Utility Databases

Some 3rd party utilities only allow certain database platforms. If you have a 3rd party application which is only designed to run on MySQL or SQL Server for instance, the data from this system can be send to an Oracle database for reporting or historical record keeping. On some 3rd party utilities it may even be possible to integrate data from other systems into the 3rd party database. With Java connectors, it is even possible to load data into NoSQL options like MongoDB or Riak with the right coding.

Using Business Data in SharePoint

Many companies have started using SharePoint or other portal tools for their corporate infrastructure. Using GoldenGate, information from financial, customer, HR, or other databases can easily be integrated into the company portal no matter which server houses the SharePoint database.

Trouble Ticketing Systems and Monitoring Tools

Using GoldenGate, it is possible to migrate data from trouble ticketing systems over to monitoring environments. For instance, if your company uses a ticketing tool to receive trouble tickets on a customer-facing application and a monitoring environment for IT personnel, GoldenGate can act as a messaging system by copying ticket information into the monitoring environment. Alternatively the flow could be reversed, and monitoring information could be used to supply extra information on tickets.

Master Data Management (MDM)

The central component to many distributed systems, MDM is becoming more and more popular in large corporate environments. MDM solutions are central databases which hold key corporate data in an attempt to always ensure accurate information across distributed environments. For instance, if a company makes use of five different databases and applications which always requires information about employees, an MDM environment could be a central aggregation point for employee data which can then be used in the external environments.

In these types of setups, GoldenGate can be used both for supplying MDM data into other databases and for loading MDM data. Data pumps from the MDM central repository can spread information to all subscribing databases, and applicable information from databases in the enterprise can ship information to MDM for central storage.

Conclusion

Oracle GoldenGate 11g is poised to make big changes in the way data is moved between environments. While replication has existed for a long time, GoldenGate is the first highly available real-time replication tool with full heterogeneous support across many platforms. With the extensible extract/replicat design it is easy to deploy and reuse on many different systems for many needs. While there are many other viable replication options out there, Oracle GoldenGate is a very strong contender for enterprise usage.

Note: This article originally appeared in IOUG SELECT Journal Q4 2011.